Google BigQuery is a great big-data tool that lets you store huge amounts of data in the cloud and manipulate it with SQL queries. But there are situations when you want to pull that data into your Python environment for more advanced purposes such as machine learning, which Python's numerous libraries make possible.

The fastest way to get data from BigQuery is to make an API request directly to BigQuery from your Python environment. I prefer using Jupyter Notebooks because of their simplicity. To do that, you need to:

1. Create a Google Cloud Platform project and enable the Google BigQuery API:

https://console.cloud.google.com/flows/enableapi?apiid=bigquery

2. Create a service account, then create and download a private key for it:

https://console.developers.google.com/iam-admin/serviceaccounts

3. Install the client library: `pip install --upgrade google-cloud-bigquery`

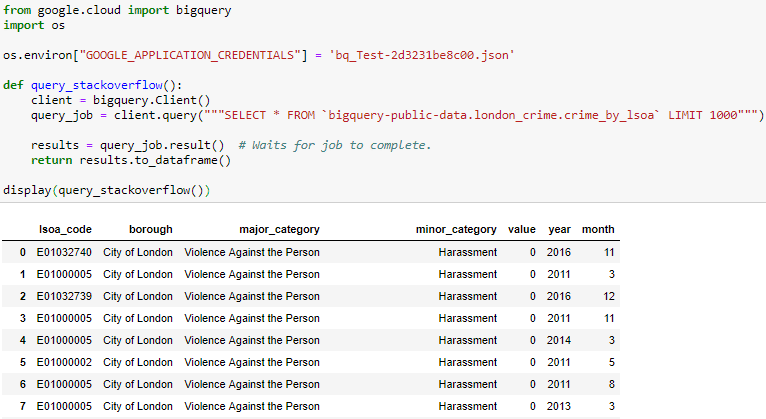

4. Run the code (replace 'your_private_key.json' with the path to your private key file):

    import os

    from google.cloud import bigquery

    # Point the client library at the service account key downloaded in step 2.
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "your_private_key.json"


    def query_london_crime():
        client = bigquery.Client()
        query_job = client.query(
            """SELECT * FROM `bigquery-public-data.london_crime.crime_by_lsoa` LIMIT 1000"""
        )
        results = query_job.result()  # Waits for the job to complete.
        return results.to_dataframe()  # Requires pandas to be installed.


    display(query_london_crime())  # display() is available in Jupyter notebooks.

You can get the table you need by changing the SQL query in the request, for example to "SELECT * FROM table_you_need", and it will return a beautiful pandas DataFrame.
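Since switching tables only changes the SQL string, the query can be wrapped in a small reusable helper. The following is a minimal sketch under stated assumptions, not part of the client library: the names `build_select` and `table_to_dataframe` are hypothetical, the table ID check is deliberately conservative (BigQuery's real naming rules allow more than this pattern), and the key is loaded with `Client.from_service_account_json` instead of the environment variable.

```python
import re

# Assumption: a conservative pattern for "project.dataset.table" IDs.
# BigQuery's actual naming rules are broader than this.
_TABLE_ID = re.compile(r"[A-Za-z0-9_\-]+\.[A-Za-z0-9_]+\.[A-Za-z0-9_\-]+")


def build_select(table_id, limit=1000):
    """Build a SELECT * query for a fully qualified table ID (hypothetical helper)."""
    if not _TABLE_ID.fullmatch(table_id):
        raise ValueError(f"expected project.dataset.table, got {table_id!r}")
    if not isinstance(limit, int) or limit < 1:
        raise ValueError("limit must be a positive integer")
    return f"SELECT * FROM `{table_id}` LIMIT {limit}"


def table_to_dataframe(table_id, limit=1000, key_path="your_private_key.json"):
    """Run the query and return the rows as a pandas DataFrame (hypothetical helper)."""
    # Imported here so build_select can be used without the client library installed.
    from google.cloud import bigquery

    # Load the service account key directly rather than through
    # the GOOGLE_APPLICATION_CREDENTIALS environment variable.
    client = bigquery.Client.from_service_account_json(key_path)
    rows = client.query(build_select(table_id, limit)).result()  # Waits for the job.
    return rows.to_dataframe()  # Requires pandas.
```

In a notebook, something like `display(table_to_dataframe("bigquery-public-data.london_crime.crime_by_lsoa", limit=100))` should then show the first 100 rows, assuming the key file is valid and the service account is allowed to run queries.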

More info:

https://cloud.google.com/bigquery/docs/quickstarts/quickstart-client-libraries